Despite the impressive capabilities of remote sensing satellites, one drawback is that their sensors capture imagery with either a high spatial resolution or a high spectral resolution, but not both.

The resulting images are either:

- panchromatic, with a high spatial resolution but a low spectral resolution; or
- multispectral, with a low spatial resolution but a high spectral resolution.

In short, there is a trade-off between the spatial and spectral resolutions.

So, how do we take advantage of the complementary characteristics of panchromatic and multispectral images?

The answer is image fusion. Specifically, pansharpening.

What is pansharpening? What role does it play in improving satellite imagery? And why is there a resolution trade-off? Let us find out.

What is pansharpening?

Pansharpening (short for panchromatic sharpening) is an image fusion technique that combines a panchromatic image with a multispectral image to yield an image with both high spatial and high spectral resolution.

In other words, we use panchromatic image details to 'sharpen' the multispectral imagery while simultaneously preserving spectral information.

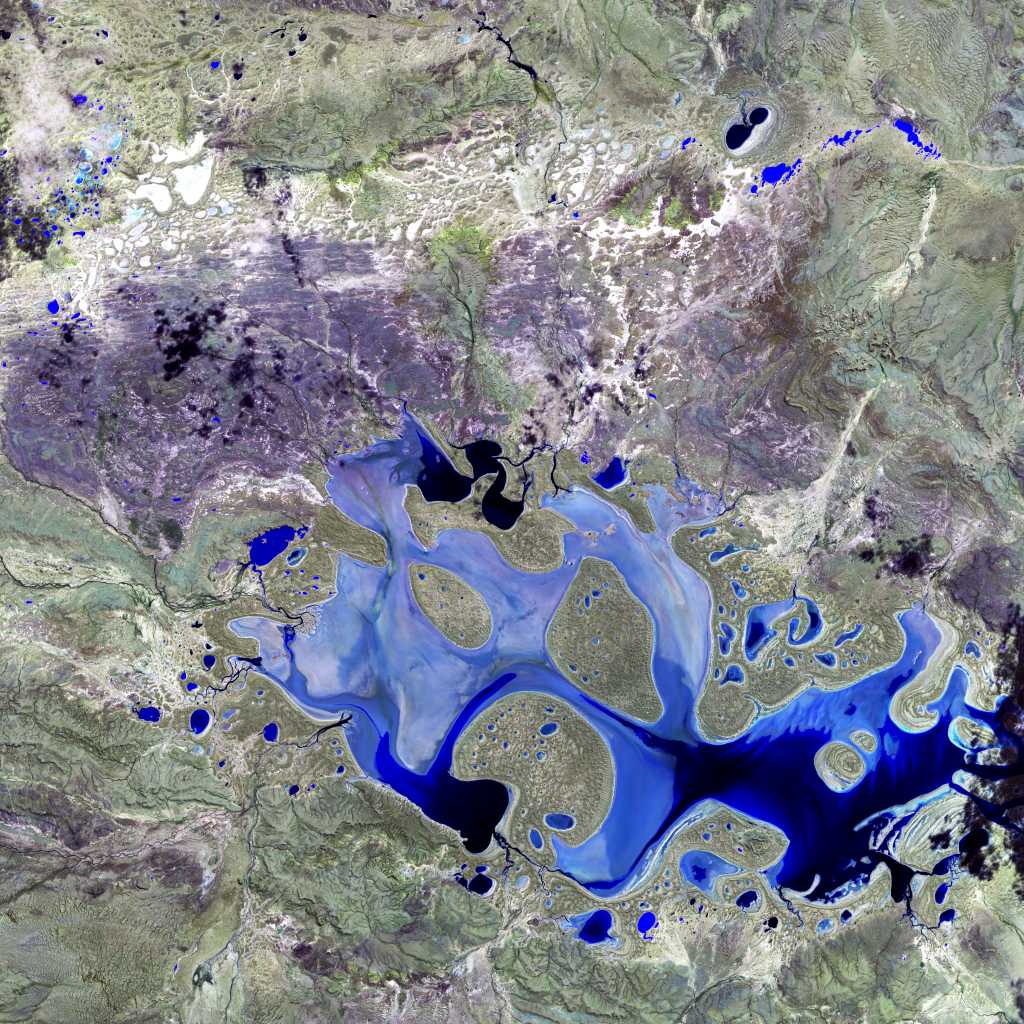

Multispectral image (left) vs. pansharpened image (right)
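Here is a minimal Python/NumPy sketch of one classic pansharpening family, a ratio-based (Brovey-style) transform. It illustrates the idea under stated assumptions and is not the method any particular platform uses. The multispectral cube is assumed to be co-registered with the panchromatic band, and nearest-neighbour upsampling stands in for proper resampling. The "intensity" is the plain mean of the bands; the classic Brovey formula uses the band sum, and many variants use weights. The function names are hypothetical.

```python
import numpy as np


def upsample_nearest(band: np.ndarray, factor: int) -> np.ndarray:
    """Nearest-neighbour upsampling: repeat each pixel `factor` times along both axes."""
    return np.repeat(np.repeat(band, factor, axis=0), factor, axis=1)


def brovey_pansharpen(ms: np.ndarray, pan: np.ndarray, factor: int) -> np.ndarray:
    """Ratio (Brovey-style) pansharpening sketch.

    ms:     (bands, h, w) multispectral cube on the coarse grid
    pan:    (h * factor, w * factor) panchromatic band on the fine grid
    factor: resolution ratio between the two grids (e.g. 4 for 1.2m vs 0.3m)
    """
    # 1. Resample every multispectral band onto the panchromatic pixel grid.
    ms_up = np.stack([upsample_nearest(b, factor) for b in ms]).astype(np.float64)
    # 2. Approximate the intensity seen by the pan band as the mean of the bands.
    intensity = ms_up.mean(axis=0)
    # 3. Scale each band by pan / intensity: spatial detail comes from pan,
    #    while the ratios between bands (the spectral information) are kept.
    ratio = pan.astype(np.float64) / np.maximum(intensity, 1e-12)
    return ms_up * ratio
```

Because the intensity is the band mean, the mean of the sharpened bands reproduces the panchromatic band, and the ratio between any two bands at a pixel is unchanged. Ratio methods like this are fast, but they can distort colors where the panchromatic band's spectral range does not match the multispectral bands.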

What is the difference between panchromatic and multispectral imagery?

Panchromatic images are single-band grayscale images captured across a broad range of the visible (and possibly near-infrared) wavelengths of the electromagnetic spectrum. Multispectral images, by contrast, consist of several bands. Each band covers a narrow portion of the visible, near-infrared, and (for some sensors) shortwave infrared wavelengths.

Earth observation (EO) satellites like Landsat, SPOT, and Pléiades collect multispectral and panchromatic images, facilitating pansharpening.

This prompts the question:

How do we pansharpen Sentinel-2 imagery since it lacks a panchromatic band?

In this case, you can create a synthetic panchromatic band from Sentinel-2's four 10m bands: blue (B2), green (B3), red (B4), and near-infrared (B8). Different methods exist. For example, some processing methods take the per-pixel average of these four bands to obtain a panchromatic band.
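Here is a minimal sketch of the averaging approach. It assumes the four 10m bands have already been read into co-registered NumPy arrays on the same grid, for example as surface reflectance. Equal weights mirror the simple average described above. The function name is hypothetical, and other methods weight or select bands differently.

```python
import numpy as np


def synthetic_pan(blue: np.ndarray, green: np.ndarray,
                  red: np.ndarray, nir: np.ndarray) -> np.ndarray:
    """Synthetic panchromatic band: per-pixel mean of Sentinel-2's 10m bands
    B2 (blue), B3 (green), B4 (red) and B8 (near-infrared)."""
    stack = np.stack([blue, green, red, nir]).astype(np.float64)
    return stack.mean(axis=0)
```

The result lives on the 10m grid, so it can serve as the panchromatic band when sharpening Sentinel-2's 20m bands.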

What if the spatial resolution of the panchromatic band is too coarse for a specific application?

In such cases, it may be necessary to integrate images from different satellite sensors. Take Landsat 8 30m resolution multispectral imagery, for example. Pansharpening it with the Landsat 8 panchromatic band achieves a 15m spatial resolution. Pansharpening it with Sentinel-2 10m data achieves a higher spatial resolution of 10m.
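One simple way to combine the two sensors is detail injection: upsample the 30m Landsat 8 band and add the high-frequency detail of a matching 10m Sentinel-2 band. The sketch below is a hypothetical illustration, not a production workflow.

It assumes both bands:

- cover similar wavelengths;
- are co-registered and radiometrically comparable;
- sit on aligned grids where each 30m pixel covers exactly 3 x 3 Sentinel-2 pixels.

Real Landsat 8 and Sentinel-2 products first need reprojection and resampling. Block averaging is also an assumption, standing in for how the coarser sensor sees the scene. The function names are hypothetical.

```python
import numpy as np


def block_mean(img: np.ndarray, factor: int) -> np.ndarray:
    """Degrade a fine grid to a coarse one by averaging factor x factor blocks."""
    h, w = img.shape
    return img.reshape(h // factor, factor, w // factor, factor).mean(axis=(1, 3))


def upsample_nearest(img: np.ndarray, factor: int) -> np.ndarray:
    """Repeat each coarse pixel factor x factor times."""
    return np.repeat(np.repeat(img, factor, axis=0), factor, axis=1)


def inject_detail(coarse: np.ndarray, fine: np.ndarray, factor: int = 3) -> np.ndarray:
    """coarse: Landsat 8 band (30m); fine: co-registered Sentinel-2 band (10m)."""
    fine = fine.astype(np.float64)
    # High-frequency detail = fine band minus its own version degraded to 30m.
    detail = fine - upsample_nearest(block_mean(fine, factor), factor)
    # Landsat 8 sets the coarse-scale values; Sentinel-2 adds the spatial detail.
    return upsample_nearest(coarse.astype(np.float64), factor) + detail
```

Suppose the Landsat 8 band equals the block-averaged Sentinel-2 band. Then the output simply reproduces the Sentinel-2 band. Otherwise, the Landsat 8 values set the coarse-scale level and Sentinel-2 supplies only the fine spatial detail.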

Alternatively, you can apply super-resolution deep learning techniques to increase the spatial resolution of multispectral bands beyond that of the available panchromatic band.


Why not collect imagery at both high spatial and high spectral resolutions?

We cannot get imagery at both high spatial and high spectral resolution because remote sensing sensors have design constraints. One implication is a trade-off between spatial and spectral resolution: we can only improve one at the expense of the other.

The design constraints include signal-to-noise ratio and data volume.

1: Signal-to-noise ratio constraints

Optical satellite sensors are designed to meet a specific signal-to-noise ratio (SNR). The recorded signal must therefore be sufficiently higher than the noise to produce a useful image.

To increase the level of energy detected, the sensor can collect data either:

- over a broader spectral range; or
- over a larger spatial extent (i.e., a larger pixel size).

This is where the spectral-spatial resolution trade-off comes in.

Panchromatic sensors collect the total energy received over a wide spectral range. They can therefore gather enough radiation from a smaller spatial extent (a smaller pixel) than multispectral sensors.

Conversely, multispectral sensors divide the energy received into several narrower spectral ranges. Each band therefore needs to sample a larger spatial area to collect enough energy to meet the required signal-to-noise ratio.

As a result, multispectral images have larger pixel sizes (i.e., a coarser spatial resolution) than panchromatic images, but more spectral bands (i.e., a higher spectral resolution). Multispectral bands are often four times coarser than the panchromatic band from the same sensor, although the ratio varies. For Landsat 8, it is two (30m vs. 15m).
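A toy model makes the signal-to-noise argument concrete. It assumes the energy a pixel collects is proportional to its spectral bandwidth times its ground area. It also assumes a flat spectrum and otherwise identical optics and detectors. Real sensor design also depends on scene radiance, detector efficiency, integration time, and more. The sensor values below are hypothetical.

```python
import math


def relative_signal(bandwidth_nm: float, pixel_size_m: float) -> float:
    """Toy model: collected energy is proportional to bandwidth x pixel ground area."""
    return bandwidth_nm * pixel_size_m ** 2


def pixel_size_for_same_signal(ref_bandwidth_nm: float, ref_pixel_m: float,
                               new_bandwidth_nm: float) -> float:
    """Pixel size a narrower band needs to collect as much energy as the reference.

    Solves new_bandwidth * s**2 == ref_bandwidth * ref_pixel**2 for s.
    """
    return ref_pixel_m * math.sqrt(ref_bandwidth_nm / new_bandwidth_nm)


# Hypothetical sensor: a 400nm-wide pan band at 0.5m vs. a 25nm-wide band
size = pixel_size_for_same_signal(400, 0.5, 25)
print(size)  # 2.0
```

Under these assumptions, a band one-sixteenth as wide needs a pixel four times larger on each side to collect the same energy. That is sixteen times the ground area.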

Let us look at Pléiades Neo image bands as an example.

| Band | Spectral Range (nm) | Spatial Resolution (m) |
|---|---|---|
| Panchromatic | 450 - 800 | 0.3 |
| Deep Blue | 400 - 450 | 1.2 |
| Blue | 450 - 520 | 1.2 |
| Green | 530 - 590 | 1.2 |
| Red | 620 - 690 | 1.2 |
| Red-Edge | 700 - 750 | 1.2 |
| Near Infrared | 770 - 880 | 1.2 |

Spectral and spatial resolution of Pléiades Neo image bands

The multispectral bands have a 1.2m spatial resolution, four times coarser than the 0.3m resolution of the panchromatic band. However, the panchromatic band covers a much wider wavelength range (350nm) than any multispectral band, each of which spans only 50nm to 110nm.
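The arithmetic behind this comparison can be checked directly against the table above. The short script below transcribes the Pléiades Neo values and derives the bandwidths and the pixel size ratio.

```python
# Values transcribed from the Pléiades Neo table: (spectral range in nm, pixel size in m)
BANDS = {
    "Panchromatic": ((450, 800), 0.3),
    "Deep Blue": ((400, 450), 1.2),
    "Blue": ((450, 520), 1.2),
    "Green": ((530, 590), 1.2),
    "Red": ((620, 690), 1.2),
    "Red-Edge": ((700, 750), 1.2),
    "Near Infrared": ((770, 880), 1.2),
}

(pan_lo, pan_hi), pan_px = BANDS["Panchromatic"]
pan_width = pan_hi - pan_lo

# Bandwidth of each multispectral band
ms_widths = {name: hi - lo for name, ((lo, hi), _px) in BANDS.items()
             if name != "Panchromatic"}
ms_px = BANDS["Red"][1]  # all multispectral bands share the same pixel size

print(f"Panchromatic bandwidth: {pan_width} nm")
print(f"Multispectral bandwidths: {min(ms_widths.values())}-{max(ms_widths.values())} nm")
print(f"Pixel size ratio: {ms_px / pan_px:.0f}x, pixel area ratio: {(ms_px / pan_px) ** 2:.0f}x")
```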

2: Data volume constraints

Collecting multispectral images at a high spatial resolution would sharply increase the volume of satellite data. The number of pixels per band grows with the square of the resolution improvement. A four-fold finer pixel size therefore means sixteen times as many pixels, driving up data storage and transmission costs. As a result, the volume of data collected is balanced against the satellite's storage and transmission capabilities, leading to a trade-off.
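A back-of-the-envelope calculation shows how quickly the volume grows. It makes three assumptions:

- the data are uncompressed;
- each sample takes 2 bytes (a hypothetical choice, e.g. 12-bit values stored in 16 bits);
- the scene covers a hypothetical 100 km² and has six multispectral bands, as listed for Pléiades Neo in the table.

```python
def raw_volume_bytes(area_km2: float, pixel_size_m: float, n_bands: int,
                     bytes_per_sample: int = 2) -> float:
    """Uncompressed data volume: pixels x bands x bytes per sample."""
    pixels_per_km2 = 1_000_000 / pixel_size_m ** 2  # 1 km^2 = 1,000,000 m^2
    return area_km2 * pixels_per_km2 * n_bands * bytes_per_sample


# Hypothetical 100 km^2 scene with six multispectral bands
at_native = raw_volume_bytes(100, 1.2, 6)   # multispectral at 1.2m
at_pan_res = raw_volume_bytes(100, 0.3, 6)  # multispectral at the 0.3m pan resolution
print(f"{at_native / 1e9:.2f} GB vs {at_pan_res / 1e9:.2f} GB "
      f"({at_pan_res / at_native:.0f}x)")
```

The uncompressed volume scales with the inverse square of the pixel size. Halving the pixel size quadruples it, and going from 1.2m to 0.3m multiplies it by sixteen.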

Why pansharpen? How pansharpening improves your satellite imagery

The purpose of pansharpening is to make satellite imagery sharper and thus easier to interpret.

Pansharpened satellite imagery is more suitable for human and machine perception or further image-processing tasks, such as segmentation, classification, and object detection. Let's see how.

Pansharpening improves visual interpretation of satellite imagery

Visual interpretation and information extraction involve identifying various objects in satellite imagery. Object identification is based on the visual elements of shape, size, texture, color, tone, pattern, shadow, and association.

A higher spatial resolution captures more ground detail, improving the ability to distinguish between closely spaced objects. This aids the identification of smaller objects like vehicles, narrow roads, or even individual trees. It also reveals finer details of shape, size, texture, and pattern, which support interpretation.

Likewise, a high spectral resolution, with separate red, green, and blue bands, makes it possible to present a realistic "true color" image that mimics human color perception. This makes it possible to identify and discriminate features based on color.
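As an illustration, you can build a true color composite by stacking the red, green, and blue bands in that order and stretching the values for display. The sketch assumes co-registered NumPy arrays. It applies a single linear 2 to 98 percentile stretch to all three bands, one common display choice among several. Stretching each band separately is also common, but it can shift the color balance. The function name is hypothetical.

```python
import numpy as np


def true_color(red: np.ndarray, green: np.ndarray, blue: np.ndarray,
               low_pct: float = 2, high_pct: float = 98) -> np.ndarray:
    """Stack red, green, blue bands into an 8-bit RGB image with a linear stretch."""
    rgb = np.stack([red, green, blue], axis=-1).astype(np.float64)
    # One stretch for all three bands, so the relative color balance is kept.
    lo, hi = np.percentile(rgb, [low_pct, high_pct])
    scaled = np.clip((rgb - lo) / max(hi - lo, 1e-12), 0.0, 1.0)
    return (scaled * 255).astype(np.uint8)
```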

By improving the spatial resolution of multispectral imagery, pansharpening leads to better visual interpretation for both humans and machines.

Pansharpening leads to the following downstream benefits:

- More accurate labeling of training datasets, leading to higher performance of machine learning algorithms
- Finer evaluation of the results of image segmentation, classification, and feature extraction algorithms

Pansharpening improves automated object detection

As with visual interpretation, a higher spatial resolution of multispectral imagery improves the performance of object detection algorithms. This is because object detection methods rely not only on the spectral signature of individual pixels but also on the shape of clusters of pixels with similar spectral signatures.

For example, roads and asphalt-paved roofs may have identical spectral signatures. By considering the shape of the pixel cluster (e.g., long and narrow vs. rectangular), however, these algorithms can distinguish them.
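Here is a toy version of this shape cue. It measures how elongated a cluster of spectrally similar pixels is and labels the cluster accordingly. This is a hypothetical rule for illustration only. It uses the axis-aligned bounding box, so it would miss diagonal roads, and the threshold of 4 is an arbitrary assumption. Real object detection methods use much richer shape and context features. The function names are hypothetical.

```python
import numpy as np


def elongation(mask: np.ndarray) -> float:
    """Long side / short side of the axis-aligned bounding box of a pixel cluster."""
    rows, cols = np.nonzero(mask)
    height = rows.max() - rows.min() + 1
    width = cols.max() - cols.min() + 1
    return float(max(height, width) / min(height, width))


def classify_asphalt_cluster(mask: np.ndarray, threshold: float = 4.0) -> str:
    """Hypothetical rule: long, narrow clusters are roads; compact ones are roofs."""
    return "road" if elongation(mask) >= threshold else "roof"


road = np.zeros((5, 20), dtype=bool)
road[2, :] = True          # a 1-pixel-wide strip, 20 pixels long
roof = np.zeros((10, 10), dtype=bool)
roof[2:8, 2:7] = True      # a 6 x 5 block
print(classify_asphalt_cluster(road), classify_asphalt_cluster(roof))  # road roof
```

At a coarse resolution, a narrow road may be less than one pixel wide and blend into its surroundings. Finer pixels are what make shape cues like this usable.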

Pansharpening increases the spatial resolution of multispectral imagery and hence the ability of the object detection algorithms to distinguish between closely spaced features and identify the objects of interest. Application examples include ship, car, and building detection.

Aircraft detection algorithm output

Pansharpening improves image classification

Each band of a multispectral image captures a narrow range of wavelengths that is sensitive to particular features on the ground or properties of the atmosphere.

Therefore, multispectral imagery enables classification based on spectral differences. The lower spatial resolution of multispectral imagery, however, means that the shape and boundaries of the classified features are not precise.

Using panchromatic imagery for classification is no good either. Because of its low spectral resolution, different features can have almost identical gray values. This makes it ineffective for separating features that differ mainly in their spectral properties. Nevertheless, shapes and boundaries are delineated more accurately owing to the high spatial resolution.

Pansharpened imagery has both high spectral and high spatial resolution. It can discriminate between land cover types and accurately delineate the area each type occupies, which improves classification.

Specifically, pansharpened images are valuable when undertaking classification in areas with complex structures like urban areas, heterogeneous forests, or highly subdivided farmlands.

Pansharpening enhances temporal resolution for change detection

Satellites are a valuable tool for change detection. Their consistent revisit schedules facilitate the quantification of changes on a broad scale.

Change detection, however, depends on the satellite's temporal resolution. For instance, the approximate revisit time is 1 day for MODIS, 16 days for Landsat 8, and 5 days for the Sentinel-2 constellation. Shorter revisit times are needed to monitor rapid changes on the Earth's surface, such as deforestation, urbanization, or agricultural activities.

Additionally, higher temporal resolutions increase the chances of obtaining cloud-free satellite imagery.

At the same time, we need a high spatial resolution to identify fine-scale changes, e.g., at the individual tree level. However, imagery with a high temporal resolution tends to have a low spatial resolution. For example, the spatial resolution is 250m-1000m for MODIS imagery, 15m-30m for Landsat 8 imagery, and 10m-60m for Sentinel-2 imagery.

One way to increase the frequency of high spatial resolution imagery is to combine high temporal resolution imagery with high spatial resolution imagery. An example is pansharpening Landsat 8 30m resolution imagery using Sentinel-2 10m data. The fusion helps to:

- Increase the number of acquisitions per month to about 8 (compared with about 2 for Landsat 8 and 6 for the Sentinel-2 constellation) for more frequent monitoring
- Detect changes at a finer spatial resolution than the coarser 30m Landsat 8 imagery allows
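The acquisition counts in the list follow from the revisit times given earlier. This sketch assumes a 30-day month and ignores cloud cover. It also assumes that no Landsat 8 and Sentinel-2 acquisitions fall on the same day.

```python
def acquisitions_per_month(revisit_days: float, days_in_month: int = 30) -> int:
    """Approximate number of acquisitions in a month for a fixed revisit interval."""
    return round(days_in_month / revisit_days)


landsat8 = acquisitions_per_month(16)   # 30 / 16 = 1.875 -> 2
sentinel2 = acquisitions_per_month(5)   # 30 / 5 = 6
combined = landsat8 + sentinel2         # assumes no two acquisitions coincide
print(landsat8, sentinel2, combined)    # 2 6 8
```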

Pansharpening, therefore, has the potential to increase the availability of satellite imagery for more frequent, higher spatial resolution monitoring.

Get the best of both worlds: Pansharpen your satellite imagery

Sensor design trade-offs mean that no single sensor offers the optimal spectral, spatial, and temporal resolution.

Multispectral imagery has a low spatial resolution. Panchromatic imagery has a low spectral resolution. And neither is suited to applications that simultaneously require a high spatial and high spectral resolution.

Pansharpened images contain the best of both worlds: high spectral resolution and high spatial resolution. Pansharpening is an important first step in extracting your geospatial insights.

It can be challenging to access satellite imagery, and the algorithms needed to analyze it, at scale. But that's changing. With a wide range of satellite data and processing algorithms, pansharpening is now easily accessible on the UP42 platform.