As a general rule, raster-based analysis requires bands at the same resolution. To achieve this, one can downsample all bands to the lowest band resolution, upsample all bands to the highest band resolution using an interpolation method (e.g. bicubic), or upsample to the highest band resolution using a convolutional neural network (CNN). This last approach can outperform other upsampling methods and preserve the spectral characteristics of the data.
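The first two options can be illustrated with a small, dependency-free sketch. It assumes bands are plain nested lists and uses nearest-neighbour repetition as a stand-in for bicubic interpolation, purely to keep the example short; the function names are hypothetical, and in practice a library such as NumPy or rasterio would be the usual choice.

```python
def upsample_nearest(band, factor):
    """Repeat every pixel `factor` times along rows and columns.

    Nearest-neighbour is used here only for brevity; it is not bicubic.
    """
    out = []
    for row in band:
        wide = [value for value in row for _ in range(factor)]
        out.extend([list(wide) for _ in range(factor)])
    return out


def downsample_mean(band, factor):
    """Average non-overlapping factor x factor blocks of pixels."""
    rows, cols = len(band), len(band[0])
    if rows % factor or cols % factor:
        raise ValueError("band dimensions must be divisible by factor")
    out = []
    for r in range(0, rows, factor):
        out_row = []
        for c in range(0, cols, factor):
            block = [band[r + i][c + j]
                     for i in range(factor) for j in range(factor)]
            out_row.append(sum(block) / len(block))
        out.append(out_row)
    return out


# A toy 2 x 2 band at 20 m, brought onto a 10 m grid and back again.
band_20m = [[0.10, 0.20], [0.30, 0.40]]
band_10m = upsample_nearest(band_20m, 2)   # 4 x 4 grid
restored = downsample_mean(band_10m, 2)    # 2 x 2 grid again
```

Neither operation adds real detail: upsampling by repetition or interpolation only redistributes existing values, which is the gap the CNN-based approach aims to close.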

Sentinel-2 (S2) is an Earth observation mission from the Copernicus Programme from ESA that acquires optical imagery at high spatial (maximum 10 m) and temporal resolution (5 days revisit time at the equator) over land and coastal waters. The S2 multispectral instrument (MSI) includes 13 bands, which are captured at different spatial resolutions.

Figure: Characteristics of the multispectral instrument (MSI) on board Sentinel-2 (https://www.mdpi.com/128566)

S2 Super-resolution creates a 10 m resolution band for each of the existing 20 m and 60 m spectral bands using a trained convolutional neural network. The output of this processing block is a multispectral (12-band), 10 m resolution GeoTIFF file. The first band (B1) is discarded since it is only useful for atmospheric correction.
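To make the band bookkeeping concrete, the sketch below lists the native resolution of the 13 MSI bands and derives the upscale factor each one needs to reach 10 m. The dictionary and function names are illustrative assumptions and are not taken from the block's code.

```python
# Native ground sampling distance (metres) of the 13 MSI bands.
MSI_RESOLUTION_M = {
    "B1": 60, "B2": 10, "B3": 10, "B4": 10, "B5": 20, "B6": 20, "B7": 20,
    "B8": 10, "B8A": 20, "B9": 60, "B10": 60, "B11": 20, "B12": 20,
}
TARGET_RESOLUTION_M = 10
DISCARDED_BANDS = ("B1",)  # dropped from the output, as described above


def upscale_factors(resolutions, target=TARGET_RESOLUTION_M,
                    discard=DISCARDED_BANDS):
    """Map each kept band coarser than `target` to its upscale factor."""
    return {
        band: res // target
        for band, res in resolutions.items()
        if band not in discard and res > target
    }


factors = upscale_factors(MSI_RESOLUTION_M)
output_bands = [b for b in MSI_RESOLUTION_M if b not in DISCARDED_BANDS]

for band, factor in factors.items():
    print(f"{band}: {MSI_RESOLUTION_M[band]} m -> 10 m (x{factor})")
```

The 20 m bands need a factor of 2 and the 60 m bands a factor of 6, while the four native 10 m bands pass through unchanged.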

This is especially useful for achieving a high degree of detail in analyses that rely on the lower resolution bands. For instance, computing a water index like NDWI (Normalized Difference Water Index) requires band 8 (NIR) and band 12 (SWIR2), which have different native resolutions (10 m and 20 m, respectively). Using the output of S2 Super-resolution, we get a 10 m NDWI product.
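As a rough illustration, NDWI in its NIR/SWIR form is the normalized difference (NIR - SWIR) / (NIR + SWIR), computed pixel by pixel. The sketch below assumes both bands have already been brought onto the same 10 m grid and are held as nested lists of reflectance values; the function name and the sample values are made up for the example.

```python
def ndwi(nir, swir):
    """Per-pixel (NIR - SWIR) / (NIR + SWIR).

    Returns 0.0 where NIR + SWIR is zero to avoid division by zero.
    """
    same_shape = len(nir) == len(swir) and all(
        len(a) == len(b) for a, b in zip(nir, swir)
    )
    if not same_shape:
        raise ValueError("bands must share the same grid")
    return [
        [(n - s) / (n + s) if (n + s) != 0 else 0.0
         for n, s in zip(nir_row, swir_row)]
        for nir_row, swir_row in zip(nir, swir)
    ]


# Toy 2 x 2 reflectance grids standing in for B8 and super-resolved B12.
b8 = [[0.30, 0.05], [0.40, 0.00]]
b12 = [[0.10, 0.15], [0.20, 0.00]]
index = ndwi(b8, b12)
```

The shape check reflects the point made above: the index is only defined per pixel once both inputs share one resolution.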

DSen2 model

To perform super-resolution on S2 images, we based our efforts on the work of Lanaras et al. (2018), which introduces the DSen2 model. DSen2 is a two-part model composed of convolutional neural networks that include multiple layers of residual blocks. One network super-resolves the 20 m resolution imagery to 10 m, while the other super-resolves the 60 m resolution imagery to 10 m.

Figure: Proposed DSen2 architecture (Lanaras et al. 2018).

We took this methodology and the available codebase, and made some modifications to train the model with a new data set and a new data type. In addition, we converted the existing implementation to work with TensorFlow version 2. The code of the block deployed in our platform is also available here.

New data source supported: L2A

The initial implementation of this model supported only level 1 S2 products. These are the top of atmosphere reflectance products. In a lot of situations, the bottom of atmosphere reflectance or level 2 products are required to remove the impact of atmospheric distortions in the imagery. This is especially important when running any time-based analysis, for example, comparing a vegetation index from July 2018 to July 2019.

For this reason, we retrained the DSen2 model with the L2A data set and achieved a level of performance similar to that reported for the original model in the paper.

{
  "aws-s2-l2a:1": {
    "ids": null,
    "bbox": [13.352809, 52.485219, 13.392914, 52.515197],
    "limit": 1,
    "time_series": [
      "2018-07-01T00:00:00+00:00/2018-07-31T23:59:59+00:00",
      "2019-07-01T00:00:00+00:00/2019-07-31T23:59:59+00:00"
    ],
    "max_cloud_cover": 100
  },
  "s2-superresolution:1": {
    "bbox": [13.352809, 52.485219, 13.392914, 52.515197],
    "contains": null,
    "intersects": null,
    "clip_to_aoi": true,
    "copy_original_bands": true
  }
}

WARNING: If you set the clip_to_aoi parameter to true, make sure your AOI is at least 4 km².

Your result will be an atmospherically corrected, 10 m resolution S2 product, including all bands.
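The 4 km² minimum from the warning above can be checked before submitting a job. The sketch below is one possible way to do so, assuming the bbox is ordered [west, south, east, north] in decimal degrees and approximating the Earth as a sphere; the helper name and constants are illustrative and not part of the platform API.

```python
import math

EARTH_RADIUS_KM = 6371.0088  # mean Earth radius (spherical approximation)
MIN_AOI_KM2 = 4.0            # minimum AOI size stated in the warning


def bbox_area_km2(bbox):
    """Area of a lon/lat bounding box on a sphere, in square kilometres.

    Assumes bbox = [west, south, east, north] in decimal degrees.
    """
    west, south, east, north = bbox
    d_lon = math.radians(east - west)
    lat_term = math.sin(math.radians(north)) - math.sin(math.radians(south))
    return EARTH_RADIUS_KM ** 2 * d_lon * lat_term


# Bounding box used in the job parameters above (Tempelhof, Berlin).
bbox = [13.352809, 52.485219, 13.392914, 52.515197]
area = bbox_area_km2(bbox)
large_enough = area >= MIN_AOI_KM2
print(f"AOI area: {area:.2f} km², large enough: {large_enough}")
```

For the example bbox this gives an area of about 9 km², comfortably above the threshold.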

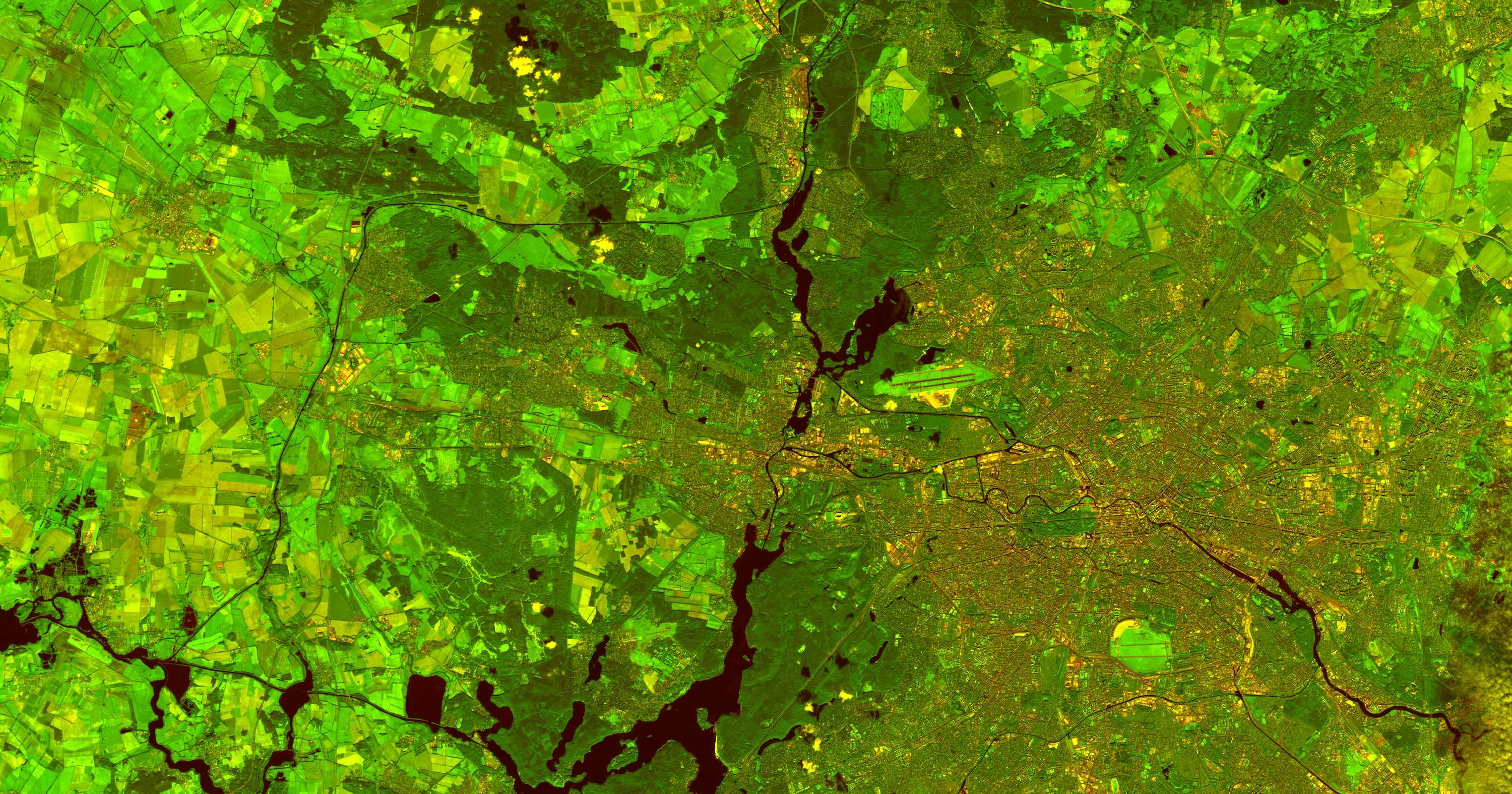

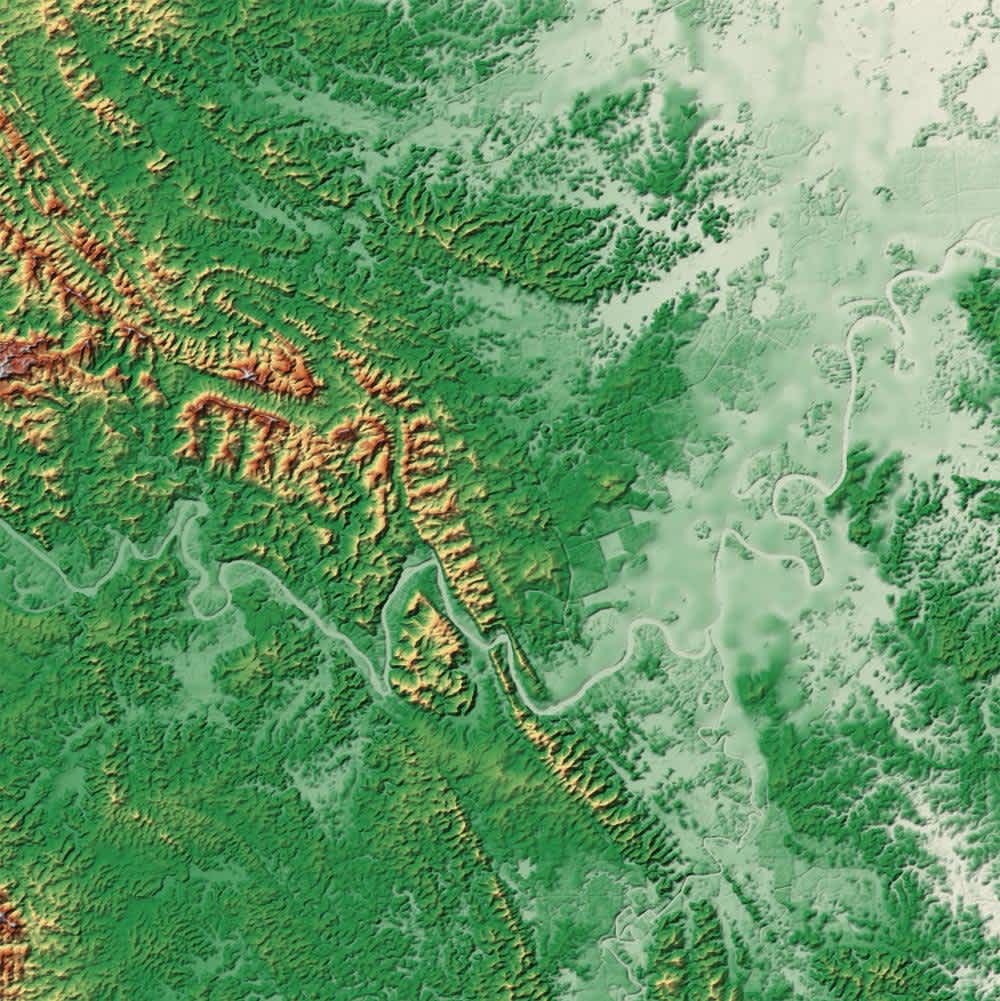

Figure: Left: original B9 and B1 composite (60 m). Right: super-resolved B9 and B1 composite (10 m). Tempelhof, Berlin, S2 L2A.
